I have to be honest. This blog post changed multiple times over the course of a few days. In earlier versions, I joked about predictions that generative AI could lie to us. That it could destroy our jobs. That it could destroy us.

And then news broke that at least one employer will not fill 7,800 back-office jobs because it believes generative AI can handle enough of those tasks satisfactorily. Ouch.

So generative AI might destroy lives after all? Or maybe it could boost demand for high-level knowledge work. That would be a good way to harness human potential, right?

Grappling with these types of questions will keep researchers, artists, and a good chunk of the 100 million or so users of OpenAI’s products busy. For now, the Leff team has decided to take a deep breath and focus on a much smaller question: How much could generative AI help us do our work—telling good stories in service of (business) goals? (My colleague Scott posted earlier about ChatGPT’s performance as a research tool, as a builder of arguments, and as a songwriter—oy.)

Without putting client information into any generative AI tools, members of the Leff team ran some basic experiments to see what these tools would produce when asked to do some of the tasks we do for work. We found that, for now, generative AI is neither a destroyer of worlds nor rocket fuel for knowledge work. Here's a decidedly nonexhaustive sampling of our findings, from surprisingly good to mind-bendingly boring.

Transcription: “Spooky but useful”

My colleague Ugnė found that a tool such as Descript will transcribe an audio file with “pretty solid but not 100 percent perfect” punctuation. The tool also flags words it may have misunderstood, which can be handy. So far, so typical for any discussion of generative AI.

The tool raised eyebrows, however, when it let Ugnė edit the audio file by editing the transcript. Delete a sentence from the transcript, and it disappears from the audio file as well. The tool can even add words to the audio file using a synthetic version of Ugnė's voice.

UX wireframes: Workable first drafts

As is the case with many generative AI tools, UX tools powered by generative AI are fast. That is, they produce mediocre outputs quickly. The human's job is to interpret those outputs and guide the tool toward better iterations.

Ugnė was able to use the tool to generate basic layouts, but noted that a human would still need to iterate on and adjust many elements of each layout, including the elements of visual branding: images, copy, color themes, and typography. The AI tool's outputs are also static, so any buttons or other interactive elements on a page would still need to be built by hand.

Voiceovers: Not a drama school grad

I’ll get this out of the way now: Yes, an AI has replicated Ugnė’s voice reading a scene from Mad Men. Yes, “she” sounds uncanny. But so does the stock voice that comes with the tool.

Here's the problem: Both AI-generated voices sound bored. Vacant. It's as if you told an indifferent civilian to audition for drama school on the spot with a script they've never seen before. Users can choose from different tones of voice, but so much of expressive speech comes from the infinite variations in cadence and tone. Unless the tool becomes much more intuitive to direct, it may be easier, and certainly more natural, to have a competent actor record the voiceover.

AI-generated voices can be useful, though, when recordings contain imperfections, whether caused by humans or by technology. Did a speaker use the wrong word? Does the audio cut out briefly because of a faulty file? Patch in what the speaker should have said with an AI clone of their voice, provided they consent to having their voice cloned. Did a speaker utter a clumsy sentence? Smoothing that over would be pushing the use case, but it could be worth trying.

First drafts: It’s something, but it’s boring

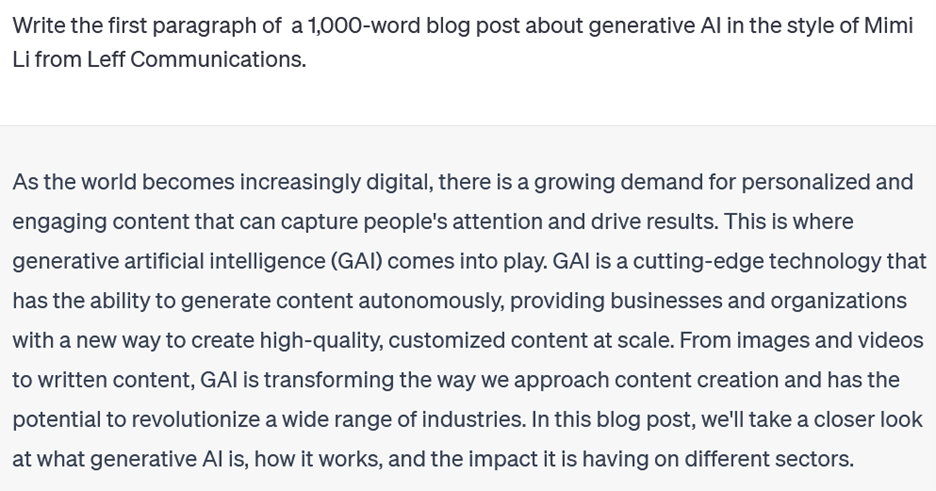

It’s boring—generic, uninspired—to say that AI-generated text is boring. I prompted ChatGPT to write the first paragraph of a blog post about generative AI in the style of Mimi Li from Leff Communications. The output almost made me weep with indignation:

So this is what generative AI thinks of me. This output could’ve been written by a dutiful but indifferent student who nonetheless manages to pull an A–.

***

I should lower my expectations of generative AI. Its whole schtick is that it's dutiful but indifferent. Maybe that's why some employers are outsourcing their low-level work to it.

When it comes to the work that the Leff team does, generative AI tools democratize the most basic parts of creative tasks. We will keep investigating how these tools might be used in our work. In the process, we'll refine and share our philosophy on the responsible use of generative AI, if it has a place in our work at all for the time being. More destabilizing findings await us all.
